it can be seen that there are a lot of green (no signal) bars which, during the randomisation test, can be selected and give returns equal to or greater than those of the signal bars (blue for longs, red for shorts). The relative sparsity of the signal bars compared with the non-signal bars gives the permutation test, in this instance, low power to detect significance, although I am not able to show that this is actually the case here.

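In light of the above, I decided to conduct a different test, the Octave .m code for which is shown below. Instead of the permutation test, it keeps the actual signal values (+1 for longs, -1 for shorts) but applies them at randomly selected entry bars, 5000 times for each of the 21 currency pairs, and compares the mean return of the real signals with the resulting distribution of random-entry mean returns over 1, 2 and 3 day horizons.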

```octave
clear all ;

load all_strengths_quad_smooth_21 ;

% the 21 currency pairs and, for each, the columns of its base and term currency in
% all_strengths_quad_smooth_21 ( 1 usd , 2 eur , 3 gbp , 4 chf , 5 jpy , 6 aud , 7 cad )
pairs = { 'audcad' , 'audchf' , 'audjpy' , 'audusd' , 'cadchf' , 'cadjpy' , 'chfjpy' , ...
          'euraud' , 'eurcad' , 'eurchf' , 'eurgbp' , 'eurjpy' , 'eurusd' , ...
          'gbpaud' , 'gbpcad' , 'gbpchf' , 'gbpjpy' , 'gbpusd' , ...
          'usdcad' , 'usdchf' , 'usdjpy' } ;

pair_ix = [ 6 7 ; 6 4 ; 6 5 ; 6 1 ; 7 4 ; 7 5 ; 4 5 ; ...
            2 6 ; 2 7 ; 2 4 ; 2 3 ; 2 5 ; 2 1 ; ...
            3 6 ; 3 7 ; 3 4 ; 3 5 ; 3 1 ; ...
            1 7 ; 1 4 ; 1 5 ] ; % [ base_ix , term_ix ] for each pair

all_random_entry_distribution_results = zeros( 21 , 3 ) ;

tic();

for ii = 1 : 21

  clear -x ii pairs pair_ix all_strengths_quad_smooth_21 all_random_entry_distribution_results ;

  % load this pair's bars, e.g. the file audcad_daily_bin_bars holds the matrix audcad_daily_bars
  bar_data = load( [ pairs{ ii } '_daily_bin_bars' ] ) ;
  bars = bar_data.( [ pairs{ ii } '_daily_bars' ] ) ;

  mid_price = ( bars( : , 3 ) + bars( : , 4 ) ) ./ 2 ; mid_price_rets = [ 0 ; diff( mid_price ) ] ;

  base_ix = pair_ix( ii , 1 ) ; term_ix = pair_ix( ii , 2 ) ;

  % the returns vectors suitably aligned with the position vector
  mid_price_rets = shift( mid_price_rets , -1 ) ;
  sma2 = sma( mid_price_rets , 2 ) ; sma2_rets = shift( sma2 , -2 ) ;
  sma3 = sma( mid_price_rets , 3 ) ; sma3_rets = shift( sma3 , -3 ) ;
  all_rets = [ mid_price_rets , sma2_rets , sma3_rets ] ;

  % delete burn in and 2016 data ( 2016 reserved for out of sample testing )
  all_rets( 7547 : end , : ) = [] ; all_rets( 1 : 50 , : ) = [] ;

  % simple divergence strategy - be long the uptrending and short the downtrending currency.
  % Uptrends and downtrends are determined by crossovers of the strengths and their respective smooths
  base_strength = all_strengths_quad_smooth_21( : , base_ix ) ;
  term_strength = all_strengths_quad_smooth_21( : , term_ix ) ;
  smooth_base = smooth_2_5( base_strength ) ; smooth_term = smooth_2_5( term_strength ) ;

  test_matrix = ( base_strength > smooth_base ) .* ( term_strength < smooth_term ) ; % +1 for longs
  short_vec = find( ( base_strength < smooth_base ) .* ( term_strength > smooth_term ) ) ;
  test_matrix( short_vec ) = -1 ; % -1 for shorts

  % delete burn in and 2016 data
  test_matrix( 7547 : end , : ) = [] ; test_matrix( 1 : 50 , : ) = [] ;

  [ ix , jx , test_matrix_values ] = find( test_matrix ) ;
  no_of_signals = length( test_matrix_values ) ;

  % the actual returns performance
  real_results = mean( repmat( test_matrix_values , 1 , size( all_rets , 2 ) ) .* all_rets( ix , : ) ) ;

  % set up for randomisation test: the same signal values applied at random entry bars
  iters = 5000 ;
  imax = size( test_matrix , 1 ) ;
  rand_results_distribution_matrix = zeros( iters , size( real_results , 2 ) ) ;

  for jj = 1 : iters
    rand_idx = randi( imax , no_of_signals , 1 ) ;
    rand_results_distribution_matrix( jj , : ) = mean( test_matrix_values .* all_rets( rand_idx , : ) ) ;
  endfor

  all_random_entry_distribution_results( ii , : ) = ( real_results - mean( rand_results_distribution_matrix ) ) ./ ...
    ( 2 .* std( rand_results_distribution_matrix ) ) ;

endfor % end of ii loop

toc()

save -ascii all_random_entry_distribution_results all_random_entry_distribution_results ;
```
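The core of the test is independent of the data loading, so it may help to see it in isolation. Below is a minimal, self-contained sketch for a single return series and a single holding period, run on synthetic data. The function name `random_entry_score` and the alternating +1/-1 return series are hypothetical, for illustration only; the sketch mirrors the logic of the loop above rather than reproducing it exactly.

```octave
1; % script marker: lets the function below be defined inside a script file

% Hypothetical helper illustrating the random-entry test on one return series.
%   positions : column vector, +1 long, -1 short, 0 no signal, one entry per bar
%   rets      : column vector of forward returns aligned with positions
%   iters     : number of random-entry draws
function score = random_entry_score ( positions , rets , iters )
  [ ix , ~ , vals ] = find( positions ) ;  % signal bars and their +1 / -1 values
  n_sig = numel( vals ) ;
  real_mean = mean( vals .* rets( ix ) ) ; % mean signed return of the real signals
  rand_means = zeros( iters , 1 ) ;
  for jj = 1 : iters
    % same signal values, applied at randomly chosen entry bars ( with replacement )
    rand_idx = randi( numel( rets ) , n_sig , 1 ) ;
    rand_means( jj ) = mean( vals .* rets( rand_idx ) ) ;
  endfor
  % distance of the real result from the random-entry mean, in units of 2 standard deviations
  score = ( real_mean - mean( rand_means ) ) / ( 2 * std( rand_means ) ) ;
endfunction

% synthetic example: returns alternate +1 / -1, and the signal is long only on the up bars
rand( "state" , 42 ) ;
rets = repmat( [ 1 ; -1 ] , 500 , 1 ) ;
positions = zeros( size( rets ) ) ;
positions( 1 : 2 : end ) = 1 ;
score = random_entry_score( positions , rets , 2000 ) ;
printf( "score = %.2f\n" , score ) ;
```

Here the real mean return is exactly 1 while random entries average close to 0, so the score comes out well above 1, i.e. far more than two standard deviations above the random-entry mean.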

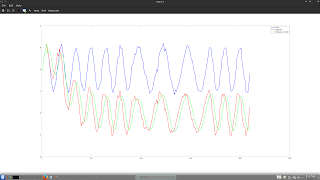
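For reference, the quantity stored in `all_random_entry_distribution_results` for each pair and return horizon, and drawn by the plotting code below, is

$$
s = \frac{\bar{r}_{\mathrm{real}} - \operatorname{mean}\left(\bar{r}_{\mathrm{rand}}\right)}{2\,\operatorname{std}\left(\bar{r}_{\mathrm{rand}}\right)}
$$

where $\bar{r}_{\mathrm{real}}$ is the mean signed return over the actual signal bars (`real_results`) and $\bar{r}_{\mathrm{rand}}$ is the set of 5000 mean signed returns obtained from random entry bars (`rand_results_distribution_matrix`). A value of $s = 1$ therefore means the real result sits two standard deviations above the random-entry mean which, if that distribution is roughly normal, corresponds to a one-sided tail probability of about 2.3%.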

```octave
plot( all_random_entry_distribution_results( : , 1 ) , 'k' , ...
      all_random_entry_distribution_results( : , 2 ) , 'b' , ...
      all_random_entry_distribution_results( : , 3 ) , 'r' , 'linewidth' , 2 ) ;
legend( '1 day' , '2 day' , '3 day' ) ;
```

The first chart shows the results for the unsmoothed currency strength indicator and the second those for the smoothed version. From this I surmise that the delay introduced by the smoothing is detrimental to performance, and so for the near future I shall be working on improving the smoothing algorithm used in the indicator calculations.
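As a rough illustration of why smoothing costs responsiveness, the sketch below measures the lag of a plain trailing simple moving average on a straight-line trend. The SMA is only a stand-in assumption here: the indicator's own smoothing (`smooth_2_5` and the quad smooth) is not shown in this post and may lag by a different amount. For an n-bar trailing SMA the lag on a linear trend is (n - 1)/2 bars.

```octave
% Lag of an n-bar trailing simple moving average on a linear trend.
% The SMA is a stand-in only; it is not the indicator's own smoothing function.
x = ( 1 : 100 )' ;                              % straight-line trend, rising one unit per bar
n = 5 ;                                         % smoothing window length
sma_x = filter( ones( n , 1 ) ./ n , 1 , x ) ;  % trailing n-bar mean; first n-1 values are warm-up
% because the trend rises one unit per bar, the vertical gap equals the lag in bars
lag = x( n : end ) - sma_x( n : end ) ;
printf( "lag = %g bars, (n - 1) / 2 = %g\n" , mean( lag ) , ( n - 1 ) / 2 ) ;
```

Longer windows give a smoother line but a proportionally larger delay, which is the trade-off any improved smoothing algorithm has to manage.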